This project looks at ways to automatically extract editorial metadata (such as tone, language and topics) about web content, making it easier to find the right content for the right audience.

Project from 2014 - 2017

What we've done

Given the abundance of content online, both published by the BBC and present on the world-wide web, how can we curate the best content for the right audience?

Editors at the BBC - whether they make radio programmes, TV shows or edit websites - make decisions on a daily basis as to the kinds of content they want to include. Those decisions take a lot of things into account: the audience first and foremost, the timeliness (why this story? why now?), the quality of the idea or content, and the BBC’s own guidelines.

We set out to discover if we could automate those editorial decisions, or at least make it easier for BBC editors to find ideas from the vast wealth of the web and the archives of BBC content.

Why it matters

Curation of content, the craft of selecting a small set from the overwhelming abundance of choice, is increasingly becoming a major mode of discovery, alongside searching and browsing. The BBC has a long history of curating content (DJs selecting the music to be played through a radio programme, for example), but we wanted to explore how to better curate online content.

Through the BBC home page team, for instance, we are already looking at how we can guide our audience across the hundreds of thousands of stories, guides, media and informational content published on our web platform. We in BBC R&D were especially interested in looking at how to help our online editors find and link to great content published by others, be it a local news story, a great post by a gifted blogger or a gem of a page on the website of a major museum - something the BBC is committed to doing better.

In order to answer this question, we have been looking at what would be needed to efficiently find and curate content to inform, educate and entertain. By prototyping and testing curation tools and methods to package and deliver curated sets of content, we were able to better understand how to help our researchers, editors and curators find the right content, whatever its origin, for the right audience.

We then developed an experimental system which allows us to index, analyse and query web content at scale. This is similar to how search engines work, but whereas they typically return results based on search terms, our tool set can interrogate content according to BBC editorial considerations such as its tone (whether it is serious or funny), sentiment, publisher, length and how easy it is to read.

Our goals

Some of the long-term goals of this project include:

- Creating new tools and technology to help our editors and researchers find and curate the right web content for the right audience - whether it is a definitive selection on any given topic from the hundreds of thousands of web pages and articles published on the BBC web site through the years, or great relevant content from elsewhere on the web

- Creating novel technology, or adopting state-of-the-art algorithms, to build a unique layer of understanding about the wealth of textual content on the web - as a complement to the technology for audio/visual analysis developed in project COMMA

How it works

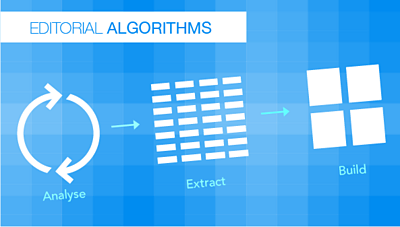

At the heart of the experimental system created through this project is a scalable pipeline for the ingestion and analysis of online textual content.

Once a source is added to the system (via a syndication feed such as RSS or Atom, or an API), the system indexes the content from that feed according to various parameters such as tone, sentiment, time to read, readability and timeliness, as well as knowledge about people, places and topics mentioned in the text.
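As an illustration of the ingestion step only, here is a minimal sketch of turning an RSS 2.0 feed into records ready for analysis. It is not the project's actual code: the function name `parse_rss` and the record keys (`title`, `link`, `text`, `published`) are assumptions made for this example, and it relies solely on the Python standard library.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime


def parse_rss(xml_text):
    """Parse an RSS 2.0 document into a list of plain dict records.

    The record shape is hypothetical; a real pipeline would likely also
    fetch and clean the full article text behind each link.
    """
    root = ET.fromstring(xml_text)
    records = []
    for item in root.findall("./channel/item"):
        pub_date = item.findtext("pubDate")
        records.append({
            "title": (item.findtext("title") or "").strip(),
            "link": (item.findtext("link") or "").strip(),
            "text": (item.findtext("description") or "").strip(),
            # RSS 2.0 dates use the RFC 822 format, which email.utils understands.
            "published": parsedate_to_datetime(pub_date) if pub_date else None,
        })
    return records
```

Each record could then be passed to the analysis stages, which would attach the editorial parameters described above.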

Some of these editorial parameters are extracted using standard algorithms (such as the Flesch-Kincaid readability test), others use our in-house language processing technology, and others still are built on experimental machine learning algorithms.
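To make the "standard algorithms" category concrete, the sketch below computes the Flesch-Kincaid grade level and a simple time-to-read estimate. The grade formula is the published one (0.39 × words per sentence + 11.8 × syllables per word − 15.59); the syllable counter is a rough vowel-group heuristic, and the default of 200 words per minute is an assumption chosen for this example rather than a value taken from the project.

```python
import re


def count_syllables(word):
    """Rough heuristic: count vowel groups, discounting a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text):
    """Return the Flesch-Kincaid grade level of an English text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


def reading_time_minutes(text, words_per_minute=200):
    """Estimate time to read; the reading speed is an assumed default."""
    return len(re.findall(r"[A-Za-z']+", text)) / words_per_minute
```

Higher grade values indicate text that is harder to read. Very simple sentences can score below zero, so the result is best used for comparing and ranking articles rather than as an absolute measure.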

This metadata can then be interrogated by queries such as "give me results about this subject that are long and difficult to read", "give me an article that is lighter in tone than this one" or "give me all the recent content you can find about Australian politics".
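The three example queries can be sketched as simple filters over metadata records. Everything here is hypothetical: the `ArticleMetadata` record, its field names, the 0-to-1 tone scale and the thresholds are assumptions made for illustration, not the schema or query language of the actual platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class ArticleMetadata:
    # Hypothetical record shape; names and scales are assumptions.
    url: str
    topics: frozenset
    tone: float           # assumed scale: 0.0 = very serious, 1.0 = very light
    reading_grade: float  # e.g. a Flesch-Kincaid grade level
    word_count: int
    published: datetime


def long_and_difficult(records, topic, min_words=1500, min_grade=12.0):
    """Results about a subject that are long and difficult to read."""
    return [r for r in records
            if topic in r.topics
            and r.word_count >= min_words
            and r.reading_grade >= min_grade]


def lighter_than(records, reference):
    """Articles sharing a topic with `reference` but lighter in tone, lightest first."""
    matches = [r for r in records
               if r.url != reference.url
               and r.tone > reference.tone
               and r.topics & reference.topics]
    return sorted(matches, key=lambda r: r.tone, reverse=True)


def recent_about(records, topic, now, max_age_days=7):
    """Content about a topic published within the last `max_age_days`, newest first."""
    cutoff = now - timedelta(days=max_age_days)
    matches = [r for r in records if topic in r.topics and r.published >= cutoff]
    return sorted(matches, key=lambda r: r.published, reverse=True)
```

At scale, filters like these would more plausibly be expressed as queries against a search index than as in-memory list comprehensions; the sketch only shows how editorial parameters become ordinary query criteria.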

Outcomes

The project officially ended at the end of March 2017. There were many outcomes, including a number of talks and presentations, many prototypes and countless reports on user research, but the main output of this project is an internal platform for the aggregation and analysis of web content, and a series of tools built around it.

We also published a series of blog posts about the genesis, development and outcomes of this project:

- Understanding Editorial Decisions is a recollection of how our R&D team observed and learned from editorial expertise around the BBC and started wondering whether some of the knowledge needed for curation of content could be translated into automation and technology.

- Unpicking Web Metadata follows up with an exploration of the kind of information about web content which can be gleaned, either directly or indirectly. We ask the question: assuming we want to curate "the best of the internet" on a daily basis, how many of these features were already available for us to use in web metadata, and how many would we have to compute ourselves?

- Using Algorithms to Understand Content gets more technical and looks at our attempts at teaching algorithms (including Machine Learning algorithms) to understand not only what web content is about, but also more complex features such as tone, subjectivity and even humour.

Related links

- BBC R&D - IP Studio: Lightweight Live

- BBC R&D - Compositing and Mixing Video in the Browser

- BBC R&D - Nearly Live Production

- BBC R&D - AI Production

- BBC R&D - Data Science Research Partnership

- BBC R&D - The Business of Bots

- BBC R&D - Using Algorithms to Understand Content

- BBC R&D - Artificial Intelligence In Broadcasting

- BBC R&D - COMMA

- BBC R&D - Content Analysis Toolkit

- BBC News Labs - Bots

- BBC Academy - What does AI mean for the BBC?

- BBC Academy - AI at the BBC: Hi-tech hopes and sci-fi fears

- BBC R&D - Natural Language Processing

- BBC R&D - CODAM

- BBC iWonder - 15 Key Moments in the Story of Artificial Intelligence

- Wikipedia - Artificial Intelligence

- BBC News - Artificial Intelligence

- BBC News - Intelligent Machines

Project Team

Internet Research and Future Services section

The Internet Research and Future Services section is an interdisciplinary team of researchers, technologists, designers, and data scientists who carry out original research to solve problems for the BBC. Our work focuses on the intersection of audience needs and public service values, with digital media and machine learning. We develop research insights, prototypes and systems using experimental approaches and emerging technologies.
